MORU

Date

August 25th, 2024

Client

E-commerce

Services

GITHUB ACTIONS CI/CD

AWS EKS

Helm Charts

Building a Production-Ready EKS Cluster with Terraform

Introduction

Amazon Elastic Kubernetes Service (EKS) has become a go-to solution for organizations looking to run containerized applications at scale. While AWS provides a managed Kubernetes control plane, setting up a production-ready EKS environment involves numerous components and best practices that need careful consideration.

In this article, I’ll walk through a complete Terraform implementation that automates the deployment of a production-grade EKS environment. This implementation is available in my GitHub repository: https://github.com/prasad-moru/AWS_EKS_TF.

Project Objectives

The main goals of this implementation are:

- Infrastructure as Code: Manage the entire AWS EKS infrastructure using Terraform

- Multi-Environment Support: Enable deployment across development, staging, and production environments

- Modularity: Create reusable Terraform modules for key components

- Security: Implement AWS security best practices, including least-privilege IAM roles

- Networking: Configure proper VPC segmentation with public and private subnets

- Add-ons: Include essential components like EBS CSI Driver and ALB Ingress Controller

- Container Registry: Set up ECR repositories with appropriate lifecycle policies

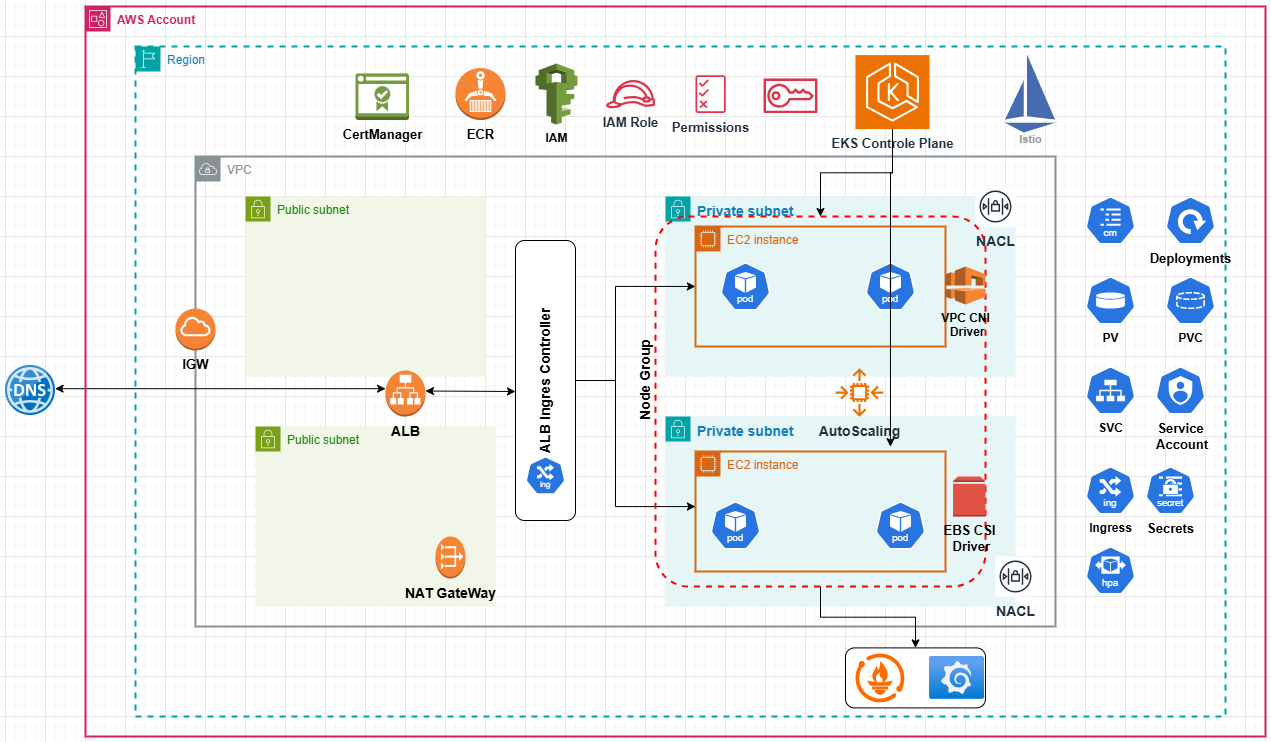

Architecture Overview

The architecture follows AWS best practices with the following components:

- VPC: Custom VPC with proper isolation between resources

- Networking: Public and private subnets across multiple availability zones

- EKS Control Plane: Managed Kubernetes control plane

- Node Groups: Auto-scaling EC2 instances in private subnets

- Load Balancing: AWS Load Balancer Controller for ingress management

- Storage: EBS CSI Driver for persistent volume support

- Security: Defense-in-depth approach with security groups, NACLs, and IAM roles

- Container Registry: ECR repositories for storing container images

Project Structure

The repository is organized with a modular approach:

    .
    ├── .gitignore
    ├── README.md
    ├── backend.tf              # Terraform state configuration
    ├── main.tf                 # Main entry point
    ├── outputs.tf              # Output definitions
    ├── providers.tf            # Provider configurations
    ├── variables.tf            # Variable definitions
    ├── modules/                # Reusable modules
    │   ├── vpc/                # VPC configuration
    │   ├── eks/                # EKS cluster configuration
    │   ├── ebs-csi/            # EBS CSI Driver configuration
    │   ├── alb-ingress/        # ALB Ingress Controller
    │   └── ecr/                # ECR repositories
    ├── environments/           # Environment-specific configurations
    │   ├── development/
    │   ├── staging/
    │   └── production/
    └── kubernetes-workloads/   # Sample Kubernetes manifests

Key Components

VPC Configuration

The VPC module creates a network foundation with proper segmentation:

    module "vpc" {
      source = "./modules/vpc"

      name             = local.name
      cidr             = var.vpc_cidr
      azs              = local.azs
      subnet_cidr_bits = var.subnet_cidr_bits
      cluster_name     = local.cluster_name
      tags             = local.vpc_tags
    }

Key features:

- Public subnets with internet gateway access

- Private subnets with NAT gateway for outbound traffic

- Proper tagging for EKS and ALB Ingress Controller

- Multi-AZ deployment for high availability
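The subnet layout and tagging listed above could be sketched roughly as follows. This is an illustration under stated assumptions, not the module's actual code: the resource names, the variable defaults, and the choice to number private subnets after the public ones are all hypothetical. The `kubernetes.io/role/elb` and `kubernetes.io/role/internal-elb` tags are the ones the AWS Load Balancer Controller looks for when discovering subnets.

```hcl
# Hypothetical inputs; the real module's variable names may differ.
variable "vpc_id" {
  type = string
}

variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

variable "subnet_cidr_bits" {
  type    = number
  default = 8
}

variable "azs" {
  type    = list(string)
  default = ["us-east-1a", "us-east-1b", "us-east-1c"]
}

variable "cluster_name" {
  type = string
}

# One public subnet per AZ, tagged for internet-facing load balancers.
resource "aws_subnet" "public" {
  count                   = length(var.azs)
  vpc_id                  = var.vpc_id
  availability_zone       = var.azs[count.index]
  cidr_block              = cidrsubnet(var.vpc_cidr, var.subnet_cidr_bits, count.index)
  map_public_ip_on_launch = true

  tags = {
    "kubernetes.io/role/elb"                    = "1"
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
  }
}

# One private subnet per AZ, tagged for internal load balancers.
# The netnum offset keeps these ranges from overlapping the public ones.
resource "aws_subnet" "private" {
  count             = length(var.azs)
  vpc_id            = var.vpc_id
  availability_zone = var.azs[count.index]
  cidr_block        = cidrsubnet(var.vpc_cidr, var.subnet_cidr_bits, count.index + length(var.azs))

  tags = {
    "kubernetes.io/role/internal-elb"           = "1"
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
  }
}
```

With the assumed defaults, `cidrsubnet` yields 10.0.0.0/24 through 10.0.2.0/24 for the public subnets and 10.0.3.0/24 through 10.0.5.0/24 for the private ones.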

EKS Cluster

The EKS module provisions a managed Kubernetes control plane:

    module "eks" {
      source = "./modules/eks"

      cluster_name           = local.cluster_name
      cluster_version        = var.eks_version
      vpc_id                 = module.vpc.vpc_id
      private_subnet_ids     = module.vpc.private_subnet_ids
      public_subnet_ids      = module.vpc.public_subnet_ids
      cluster_role_arn       = aws_iam_role.cluster.arn
      node_security_group_id = aws_security_group.nodes.id
      enable_core_addons     = false
      tags                   = local.eks_tags
    }

The node groups are created with auto-scaling capabilities:

    resource "aws_eks_node_group" "this" {
      cluster_name    = module.eks.cluster_name
      node_group_name = local.node_group_name
      node_role_arn   = aws_iam_role.node.arn
      subnet_ids      = module.vpc.private_subnet_ids

      scaling_config {
        desired_size = var.eks_node_desired_size
        max_size     = var.eks_node_max_size
        min_size     = var.eks_node_min_size
      }

      # Additional configuration...
    }

IAM Security

The implementation follows AWS security best practices by creating dedicated IAM roles with least privilege:

    resource "aws_iam_role" "cluster" {
      name = "${local.cluster_name}-role"

      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Effect = "Allow"
            Principal = {
              Service = "eks.amazonaws.com"
            }
            Action = "sts:AssumeRole"
          }
        ]
      })

      # Tags and additional configuration...
    }

For the worker nodes:

    resource "aws_iam_role" "node" {
      name = "${local.node_group_name}-role"

      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Effect = "Allow"
            Principal = {
              Service = "ec2.amazonaws.com"
            }
            Action = "sts:AssumeRole"
          }
        ]
      })

      # Tags and additional configuration...
    }

The implementation also includes OIDC provider integration, allowing Kubernetes service accounts to assume IAM roles:

    resource "aws_iam_openid_connect_provider" "eks" {
      client_id_list  = ["sts.amazonaws.com"]
      thumbprint_list = [data.tls_certificate.eks.certificates[0].sha1_fingerprint]
      url             = module.eks.cluster_identity_oidc_issuer

      # Tags and additional configuration...
    }
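The snippet above references `data.tls_certificate.eks`, which is not shown. A data source along these lines, from the hashicorp/tls provider, would typically supply it. Treat this as a sketch: the repository's actual definition may differ.

```hcl
# Fetches the certificate chain served by the cluster's OIDC issuer so that
# its SHA-1 fingerprint can be passed to thumbprint_list.
data "tls_certificate" "eks" {
  url = module.eks.cluster_identity_oidc_issuer
}
```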

Storage with EBS CSI Driver

The EBS CSI Driver module enables Kubernetes persistent volumes:

    module "ebs_csi" {
      source = "./modules/ebs-csi"

      cluster_name      = module.eks.cluster_name
      oidc_provider_arn = aws_iam_openid_connect_provider.eks.arn
      oidc_provider_url = aws_iam_openid_connect_provider.eks.url
    }

This module:

- Creates the necessary IAM role with the AmazonEBSCSIDriverPolicy attachment

- Installs the EBS CSI Driver as an EKS add-on

- Supports optional KMS encryption for EBS volumes
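What such a module does internally might look like the following sketch. The resource names and variable declarations are assumptions, not the repository's code. The service account name `ebs-csi-controller-sa` in `kube-system` is the one the EKS add-on uses by default, and the policy ARN is the AWS-managed AmazonEBSCSIDriverPolicy.

```hcl
# Hypothetical module inputs, mirroring the arguments passed above.
variable "cluster_name" {
  type = string
}

variable "oidc_provider_arn" {
  type = string
}

variable "oidc_provider_url" {
  type = string
}

# Trust policy: only the driver's service account may assume the role,
# via the cluster's OIDC provider (IAM Roles for Service Accounts).
data "aws_iam_policy_document" "ebs_csi_assume" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [var.oidc_provider_arn]
    }

    condition {
      test     = "StringEquals"
      variable = "${replace(var.oidc_provider_url, "https://", "")}:sub"
      values   = ["system:serviceaccount:kube-system:ebs-csi-controller-sa"]
    }
  }
}

resource "aws_iam_role" "ebs_csi" {
  name               = "${var.cluster_name}-ebs-csi"
  assume_role_policy = data.aws_iam_policy_document.ebs_csi_assume.json
}

# Attach the AWS-managed policy that grants the EBS permissions the driver needs.
resource "aws_iam_role_policy_attachment" "ebs_csi" {
  role       = aws_iam_role.ebs_csi.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"
}

# Install the driver as a managed EKS add-on bound to the role above.
resource "aws_eks_addon" "ebs_csi" {
  cluster_name             = var.cluster_name
  addon_name               = "aws-ebs-csi-driver"
  service_account_role_arn = aws_iam_role.ebs_csi.arn
}
```

Scoping the trust policy to a single service account means other pods on the same nodes cannot pick up the driver's EBS permissions.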

ALB Ingress Controller

For external access to Kubernetes services, the ALB Ingress Controller (now distributed as the AWS Load Balancer Controller) is configured:

    module "alb_ingress" {
      source = "./modules/alb-ingress"
      count  = var.enable_alb_ingress ? 1 : 0

      cluster_name      = module.eks.cluster_name
      vpc_id            = module.vpc.vpc_id
      oidc_provider_arn = aws_iam_openid_connect_provider.eks.arn
      oidc_provider_url = aws_iam_openid_connect_provider.eks.url
    }

This module handles:

- IAM roles with proper permissions for managing AWS resources

- Installation of the AWS Load Balancer Controller using Helm

- Subnet discovery configuration for ALB placement

- Optional WAF and Shield integration for enhanced security
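The Helm installation step could be sketched as below. The chart name and repository URL are those of the public eks-charts repository. The variable names, including `controller_role_arn` for an IAM role created elsewhere, are hypothetical. It also assumes a `helm` provider already configured against the cluster.

```hcl
# Hypothetical inputs; the IAM role is assumed to be created separately
# with a trust policy for the controller's service account.
variable "cluster_name" {
  type = string
}

variable "vpc_id" {
  type = string
}

variable "controller_role_arn" {
  type = string
}

resource "helm_release" "aws_load_balancer_controller" {
  name       = "aws-load-balancer-controller"
  repository = "https://aws.github.io/eks-charts"
  chart      = "aws-load-balancer-controller"
  namespace  = "kube-system"

  # Chart values passed as YAML: the cluster to manage, the VPC to operate in,
  # and a service account annotated with the IAM role to assume.
  values = [yamlencode({
    clusterName = var.cluster_name
    vpcId       = var.vpc_id
    serviceAccount = {
      create = true
      name   = "aws-load-balancer-controller"
      annotations = {
        "eks.amazonaws.com/role-arn" = var.controller_role_arn
      }
    }
  })]
}
```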

Container Registry (ECR)

To store container images, ECR repositories are created:

    module "ecr" {
      source = "./modules/ecr"

      repository_names        = var.ecr_repository_names
      image_tag_mutability    = var.ecr_image_tag_mutability
      scan_on_push            = var.ecr_scan_on_push
      enable_lifecycle_policy = var.ecr_enable_lifecycle_policy
      max_image_count         = var.ecr_max_image_count
      node_role_arn           = aws_iam_role.node.arn

      # Tags and additional configuration...
    }

Key features:

- Image scanning for security vulnerabilities

- Lifecycle policies to limit stored images

- Repository policies for access control
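As a rough illustration of the scanning and lifecycle features, a module like this might contain resources such as the following. The resource names, the variable defaults, and the single "keep the newest N images" rule are assumptions rather than the repository's actual policy.

```hcl
# Hypothetical inputs with illustrative defaults.
variable "repository_names" {
  type    = list(string)
  default = ["app"]
}

variable "max_image_count" {
  type    = number
  default = 30
}

# One repository per name, with vulnerability scanning on every push.
resource "aws_ecr_repository" "this" {
  for_each             = toset(var.repository_names)
  name                 = each.value
  image_tag_mutability = "IMMUTABLE"

  image_scanning_configuration {
    scan_on_push = true
  }
}

# Expire the oldest images once a repository holds more than max_image_count.
resource "aws_ecr_lifecycle_policy" "this" {
  for_each   = aws_ecr_repository.this
  repository = each.value.name

  policy = jsonencode({
    rules = [{
      rulePriority = 1
      description  = "Keep only the most recent images"
      selection = {
        tagStatus   = "any"
        countType   = "imageCountMoreThan"
        countNumber = var.max_image_count
      }
      action = {
        type = "expire"
      }
    }]
  })
}
```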

Multi-Environment Support

The repository supports multiple environments (development, staging, production) through environment-specific directories:

    environments/
    ├── development/
    ├── staging/
    └── production/

Each environment has its own:

- Backend configuration for state management

- Variables with environment-specific values

- Outputs for environment-specific resources

This approach enables consistent infrastructure deployment across environments while allowing for environment-specific customizations.
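For instance, a production variables file might override only the values that differ from the defaults. The file path and every value below are hypothetical. The variable names are the ones used elsewhere in this article.

```hcl
# environments/production/terraform.tfvars (hypothetical example values)
region      = "us-east-1"
project     = "YourProject"
environment = "production"

# Larger and more numerous nodes than a development environment would need.
eks_node_instance_types = ["m5.large"]
eks_node_desired_size   = 3
eks_node_min_size       = 3
eks_node_max_size       = 6
```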

State Management

The project uses an S3 backend with DynamoDB locking for state management:

    terraform {
      backend "s3" {
        bucket         = "aws-eks-tt-automation"
        key            = "environments/development/terraform.tfstate"
        region         = "us-east-1"
        encrypt        = true
        dynamodb_table = "terraform-state-lock"
      }
    }

This provides:

- Safe storage of Terraform state

- State locking to prevent concurrent modifications

- Encrypted state for security
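The bucket and lock table themselves can also be described in Terraform, applied once from a separate bootstrap configuration. The sketch below reuses the bucket and table names from the backend block. Versioning, the public access block, and on-demand billing are additions assumed here, not settings taken from the repository.

```hcl
resource "aws_s3_bucket" "state" {
  bucket = "aws-eks-tt-automation"
}

# Versioning allows recovery of an earlier state file after a bad apply.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state objects at rest by default.
resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# State files can contain secrets, so block every form of public access.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend's DynamoDB locking expects a string hash key named LockID.
resource "aws_dynamodb_table" "lock" {
  name         = "terraform-state-lock"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```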

Deployment Process

To deploy the infrastructure:

- Clone the repository:

    git clone https://github.com/prasad-moru/AWS_EKS_TF.git
    cd AWS_EKS_TF

- Create an S3 bucket and DynamoDB table for state management:

    aws s3api create-bucket --bucket aws-eks-tt-automation --region us-east-1
    aws dynamodb create-table \
      --table-name terraform-state-lock \
      --attribute-definitions AttributeName=LockID,AttributeType=S \
      --key-schema AttributeName=LockID,KeyType=HASH \
      --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5 \
      --region us-east-1

- Create a terraform.tfvars file with your configurations:

    aws_access_key = "your-access-key"
    aws_secret_key = "your-secret-key"
    region         = "us-east-1"
    project        = "YourProject"
    environment    = "dev"

- Initialize, plan, and apply:

    terraform init
    terraform plan
    terraform apply

- Configure kubectl to connect to your cluster:

    aws eks update-kubeconfig --name <cluster-name> --region <region>

Sample Kubernetes Deployments

The repository includes sample Kubernetes manifests for testing:

    # nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 80
              resources:
                requests:
                  cpu: "100m"
                  memory: "128Mi"
                limits:
                  cpu: "500m"
                  memory: "512Mi"

These can be deployed with:

kubectl apply -f kubernetes-workloads/nginx-deployment.yaml

kubectl apply -f kubernetes-workloads/nginx-service.yaml

kubectl apply -f kubernetes-workloads/nginx-ingress.yaml

Security Hardening

The implementation includes several security best practices:

- Network Segmentation: Nodes are in private subnets with controlled access

- IAM Least Privilege: Roles with minimum necessary permissions

- Security Groups: Tightly controlled communication paths

- OIDC Federation: Service-account level permissions

- ECR Scanning: Automatic vulnerability scanning

- Private Endpoint Access: API server accessible within VPC
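The private endpoint setting in the last item is controlled in the cluster's `vpc_config` block. The sketch below is one possible arrangement, not the repository's configuration. The variable names are hypothetical, and it assumes public access is wanted only when an allow-list of admin CIDR ranges is provided.

```hcl
# Hypothetical inputs.
variable "cluster_name" {
  type = string
}

variable "cluster_role_arn" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string)
}

variable "admin_cidrs" {
  description = "CIDR ranges allowed to reach the public endpoint; empty disables it"
  type        = list(string)
  default     = []
}

resource "aws_eks_cluster" "this" {
  name     = var.cluster_name
  role_arn = var.cluster_role_arn

  vpc_config {
    subnet_ids = var.private_subnet_ids

    # Nodes and in-VPC clients reach the API server over private networking.
    endpoint_private_access = true

    # Expose the public endpoint only when an allow-list is supplied.
    endpoint_public_access = length(var.admin_cidrs) > 0
    public_access_cidrs    = length(var.admin_cidrs) > 0 ? var.admin_cidrs : null
  }
}
```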

Monitoring and Management

While not fully implemented, the repository structure supports integration with monitoring tools:

    monitoring/
    └── grafana/
        └── dashboards/
            └── kubernetes-overview.json

This can be expanded to include CloudWatch, Prometheus, and other observability solutions.

Customization Options

The implementation is highly configurable through variables:

    variable "eks_node_instance_types" {
      description = "EC2 instance types for EKS node groups"
      type        = list(string)
      default     = ["t3.medium"]
    }

    variable "eks_node_desired_size" {
      description = "Desired number of worker nodes"
      type        = number
      default     = 2
    }

This allows for easy customization without modifying the core code.
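Variables like these can also carry validation rules so that a bad override fails at plan time rather than during provisioning. The following is a sketch of how the desired-size declaration could be extended. The validation rule is an assumption and is not present in the repository.

```hcl
variable "eks_node_desired_size" {
  description = "Desired number of worker nodes"
  type        = number
  default     = 2

  # Reject values that would leave the node group empty.
  validation {
    condition     = var.eks_node_desired_size >= 1
    error_message = "The desired node count must be at least 1."
  }
}
```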

Conclusion

This EKS Terraform implementation provides a solid foundation for running containerized workloads on AWS. It follows best practices for security, scalability, and maintainability, making it suitable for both development and production use.

Key takeaways:

- Infrastructure as Code enables consistent, repeatable deployments

- Modular design allows for reusability and clear separation of concerns

- Multi-environment support facilitates the development lifecycle

- Security is implemented at multiple layers

- Core Kubernetes add-ons are included for production readiness

For more details and to contribute, visit the GitHub repository: https://github.com/prasad-moru/AWS_EKS_TF.